For the last century or so, the way drivers and passengers interact with a car has been largely the same. The steering wheel and pedal assembly and the gauges, switches and warning lights feel natural to most of us. But not only is interface technology evolving and showing us new ways to carry out functions, the car itself is becoming so connected that we need to rethink completely how to interact with it.

With the advent of smart devices, expectations about the user experience have changed. Interfaces need to be intuitive and self-explanatory, and information needs to be displayed in a format that is quick and easy to understand, all without the user having to consult a manual.

While the so-called glass cockpit in planes - the switch from classic instruments and switches to multi-purpose screens - is a success story, widely adopted from general aviation aircraft to big airliners, it is a mixed bag in cars. Some dashboards have become downright clunky, overly complicated control panels that are hard to understand. The time when you could adjust the volume on your car radio without so much as a glance is now gone.

Is there a way back to this beautiful simplicity without actually taking away the comfort functions car buyers nowadays expect?

Express yourself

As long as cars aren’t driving themselves, the way you interact with them needs to be as effortless as possible and must not distract from what is happening on the road. Buttons and switches have met those criteria for a long time, but they were never ideal. There is an opportunity for gestural and voice-recognition interfaces to be even more intuitive and safer for drivers to use while driving.

At first, the gestures might have to be made on the multi-touch screens already widely in use today. Instead of having to aim for a virtual button on that screen, you could draw a gesture on it that would adjust the music volume, change the A/C setting or even call your mom.

One step further would be to take away the need to physically touch a screen. As gaming consoles like the Xbox One and its improved Kinect prove, a combination of gesture and voice control is an effective way to control comfort functions.

If the car is “smart” enough, you could even train it with your own gestures and voice commands instead of teaching yourself the ones someone else thought of. With the proliferation of machine learning, this is something that will surely happen sooner rather than later.

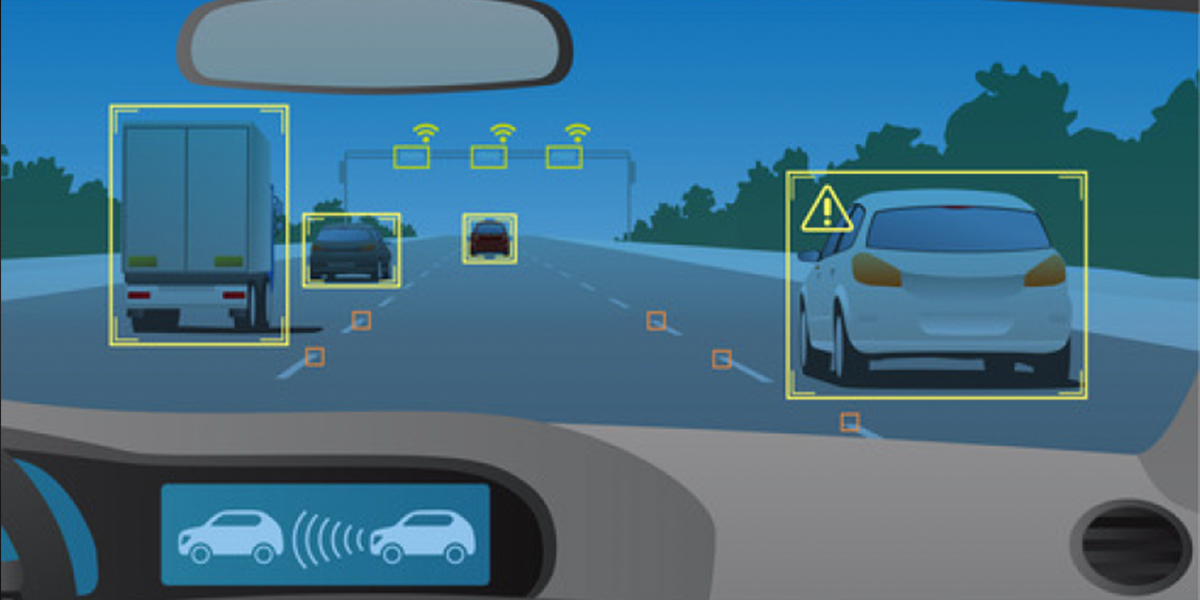

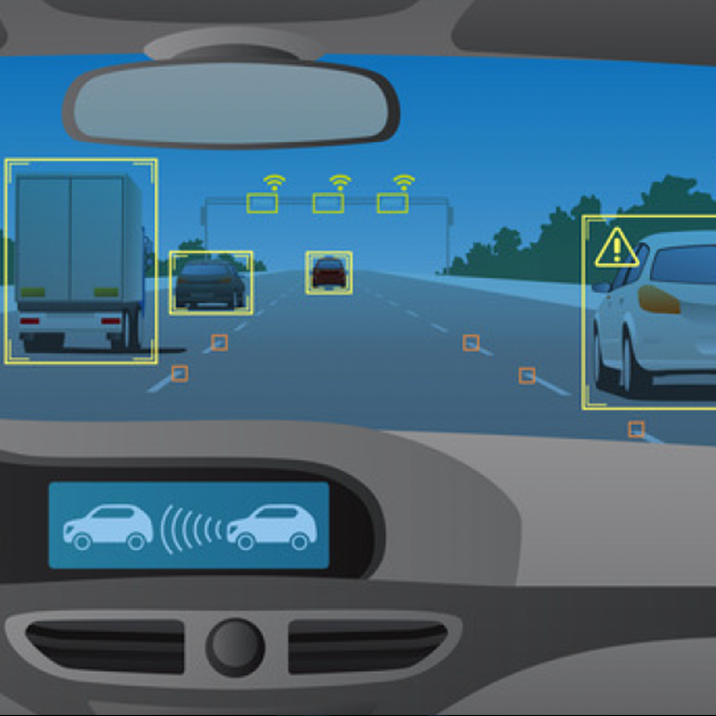

Not only would you be telling the car what you want, but the car would also inform you of what is going on around you - in a much more sophisticated way than today.

Do not disturb

When the driver is in control of the car, Augmented Reality systems like HUDs will be able to highlight upcoming traffic signs, cyclists or pedestrians and display navigation info, seamlessly integrated into the driver’s view without them having to take their eyes off the road.

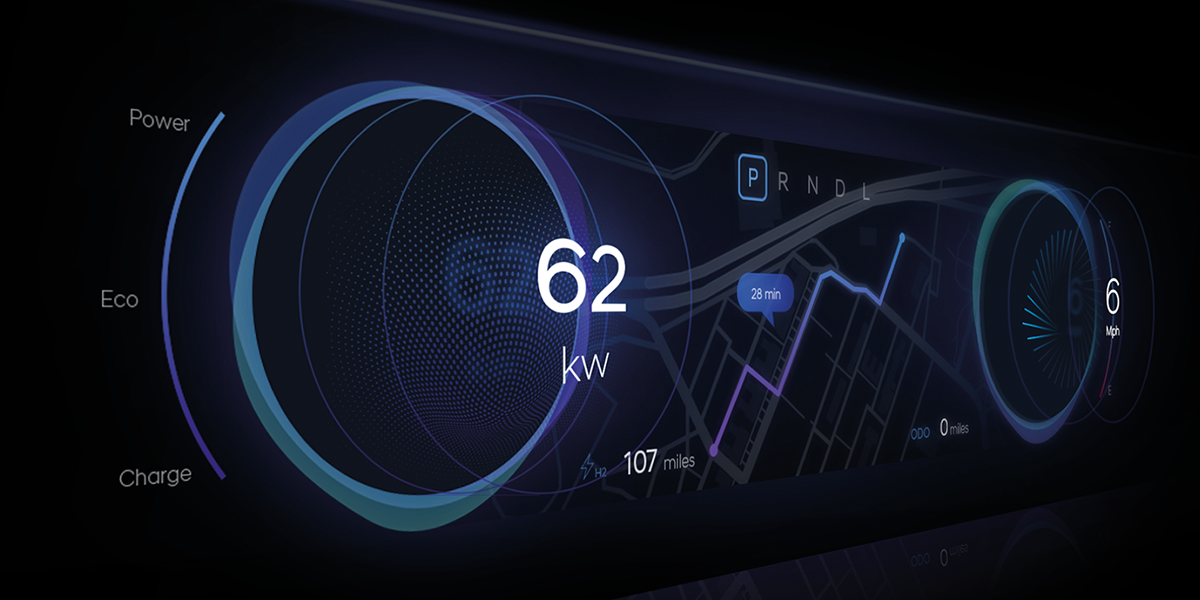

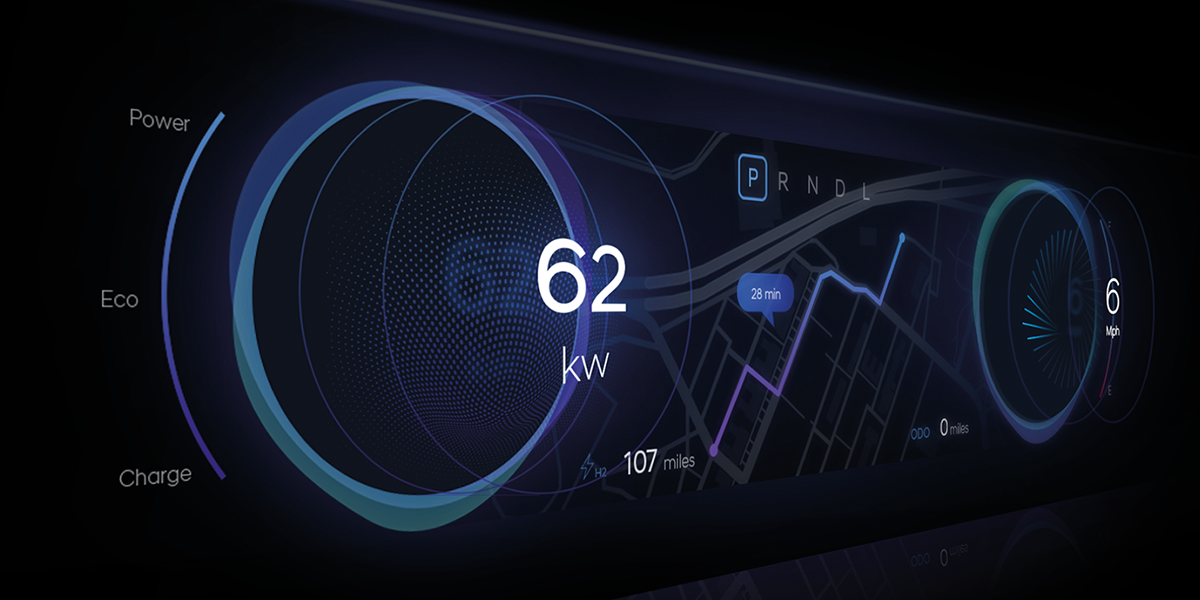

And when the car is driving itself, it can keep you updated on how long the drive will take and where you are. If we consider a holographic display system, which doesn't rely on any physical screens, an overview of the current journey could be projected alongside your current Spotify playlist, so that the information can be accessed conveniently without being intrusive.

Sending the right signals

Communication with your car will also start before you even sit down in your seat. Smart devices with near field communication capabilities can not only tell the vehicle that you are approaching and unlock it, but also tell it how to adjust your seat, what temperature to set and even how you would like your car interface to look.

So your dashboard might look different from your partner’s, even though you share the car.

As autonomous vehicles and ride-sharing services become more widespread and car ownership declines as projected, this might become an increasingly popular function.

Looking even further

In the short and medium term, interfaces might switch to better screens with more intuitive touch, gesture and voice controls, Augmented Reality technologies like HUDs and even holographic displays – especially with the advent of the self-driving car – but what about a time even further into the future?

What if the ultimate human-machine interface turns out to be the human itself? While it seems to be squarely in the realm of science fiction now, there might indeed be a time when people will be equipped with a direct neural interface that can connect to smart machinery to exchange information and commands.

Think!

You would not have to touch a button or even make a gesture. A simple thought would be enough to get the car going, and important information could be brought directly to your optic nerve to be integrated into your field of view.

People who are still driving themselves could get a feed of the vehicle’s telemetry data and an even more direct “seat of the pants” feel for their car than ever before.

Will we ever get to this point? Who knows, but somewhere, someone might already be working on it.
